Open Media Forensics Challenge

In general, media forensic applications involve multiple tasks, for example manipulation detection and localization, Generative Adversarial Network (GAN) detection, image splice detection and localization, event verification, camera verification, and provenance history analysis.

The OpenMFC initially focuses on manipulation detection and deepfake tasks. In the future, the challenges may be expanded based on community interest. The OpenMFC 2022 has the following three task categories: Manipulation Detection (MD), Deepfakes Detection (DD), and Steganography Detection (StegD).

- MD: Manipulation Detection

- DD: Deepfakes Detection

- StegD: Steganography Detection

A brief summary of each category and its tasks is given below. In the summary, the evaluation media are described as follows: a ‘base’ is original media with high provenance, a ‘probe’ is a test media item, and a ‘donor’ is another media item whose content was donated into the base media to generate the probe. For a full description of the evaluation tasks, please refer to the OpenMFC 2022 Evaluation Plan [Download Link].

Manipulation Detection (MD)

The objective of Manipulation Detection (MD) is to detect whether a probe has been manipulated and, if so, to spatially localize the edits. Manipulation in this context is defined as deliberate modification of media (e.g., splicing and cloning). Localization is encouraged but not required for OpenMFC.

The MD category includes three tasks:

- Image_MD (or IMD): Image Manipulation Detection

- ImageSplice_MD (or ISMD): Image Splice Manipulation Detection

- Video_MD (or VMD): Video Manipulation Detection

The Image Manipulation Detection task is to detect whether the image has been manipulated, and then to spatially localize the manipulated region. For detection, the IMD system provides a confidence score for each probe (i.e., a test image), with higher numbers indicating the image is more likely to have been manipulated. The target probes (i.e., probes that should be detected as manipulated) include potentially any image manipulations, while the non-target probes (i.e., probes not containing image manipulations) include only high provenance images that are known to be original. Systems are required to process and report a confidence score for every probe.

For the localization part of the task, the system provides an image bit-plane mask (either binary or greyscale) that indicates the manipulated pixels. Only local manipulations (e.g., clone) require a mask output while global manipulations (e.g., blur) affecting the entire image do not require a mask.

The new task, Image Splice Manipulation Detection (ISMD), is added in OpenMFC 2022 to support entry-level public participants. ISMD covers the 'splice' manipulation operation only. The testing dataset is small (2K images) and contains either original images without any manipulation or spliced images. The ISMD task is to detect whether a probe image has been spliced.

The Video Manipulation Detection (VMD) task is to detect whether the video has been manipulated. In this task, the localization of spatial/temporal-spatial manipulated regions is not addressed. For detection, the VMD system provides a confidence score for each probe (i.e., a test video), with higher numbers indicating the video is more likely to have been manipulated. For VMD, target probes (i.e., probes that should be detected as manipulated) include potentially any video manipulations, while the non-target probes (i.e., probes not containing video manipulations) include only high provenance videos that are known to be original. Systems are required to process and report a confidence score for every probe.

Deepfakes Detection (DD)

With recent advances in Deepfakes techniques and Generative Adversarial Networks (GANs), imagery producers are able to generate realistic fake objects in media. The objective of Deepfakes Detection (DD) is to detect whether a probe has been manipulated with Deepfakes or GAN techniques.

The DD category includes two tasks, based on testing media type:

- Image_DD (or IDD): Image Deepfakes Detection. The Image Deepfakes Detection task evaluates whether a system can specifically detect Deepfaked images (e.g., a face video is manipulated by a Deepfakes tool and the deepfaked face frames are then extracted as image files) or GAN-manipulated images (e.g., created by a GAN model, or locally/globally modified by a GAN filter/operation) while not detecting other forms of manipulation. In the testing datasets, the non-target probes are high provenance images (or cropped high provenance images) and the target probes are images manipulated with Deepfakes or GAN techniques.

- Video_DD (or VDD): Video Deepfakes Detection. The Video Deepfakes Detection task evaluates whether a system can detect Deepfaked videos. The target probes include Deepfaked videos, while the non-target probes include high provenance original videos or video clips.

Steganography Detection (StegD)

- StegD: Steganography Detection. The Steganography Detection task evaluates whether a probe is a stego image, i.e., one that contains a hidden message either in pixel values or in optimally selected coefficients. For each StegD trial, which consists of a single probe image, the StegD system must render a confidence score, with higher numbers indicating the probe image is more likely to be a stego image.

All probes must be processed independently of each other within a given task and across all tasks, meaning content extracted from probe data must not affect another probe.

For the OpenMFC 2022 evaluation, all tasks should run under the following conditions:

Image Only (IO)

For the image tasks, the system is only allowed to use the pixel-based content for images as input to the system. No image header or other information should be used.

Video Only (VO)

For the video tasks, the system is only allowed to use the pixel-based content for videos and audio (if audio exists) as input. No video header or other information should be used.

For detection performance assessment, system performance is measured by the Area Under the Curve (AUC), which is the primary metric, and by the Correct Detection Rate at a False Alarm Rate of 5% (CDR@FAR = 0.05), both derived from the Receiver Operating Characteristic (ROC) curve, as shown in Figure (a) below. This applies to both image and video tasks.
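The two detection metrics can be illustrated with a minimal Python sketch. It is not the official DetectionScorer: the function names are hypothetical, the AUC uses the trapezoidal rule, and the CDR at the requested FAR is obtained by linear interpolation between ROC points, which is an assumption about how the operating point is read off the curve.

```python
def roc_points(scores, labels):
    """Return ROC points (far, cdr), starting at (0, 0).

    scores: confidence scores, higher means more likely manipulated.
    labels: 1 for target probes, 0 for non-target probes.
    """
    n_target = sum(labels)
    n_nontarget = len(labels) - n_target
    if n_target == 0 or n_nontarget == 0:
        raise ValueError("need both target and non-target probes")
    # Sweep the decision threshold from the highest score downwards.
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Probes with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_nontarget, tp / n_target))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def cdr_at_far(points, far=0.05):
    """Correct detection rate at the given FAR (linear interpolation)."""
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= far <= x1:
            if x1 == x0:
                return max(y0, y1)
            return y0 + (y1 - y0) * (far - x0) / (x1 - x0)
    return points[-1][1]


# Toy example: two target probes and two non-target probes.
points = roc_points([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])
print(auc(points), cdr_at_far(points, far=0.05))
```

Under this sketch, a system that assigns the same score to every probe produces the single diagonal segment from (0, 0) to (1, 1), giving an AUC of 0.5 and a CDR of 0.05 at FAR = 0.05.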

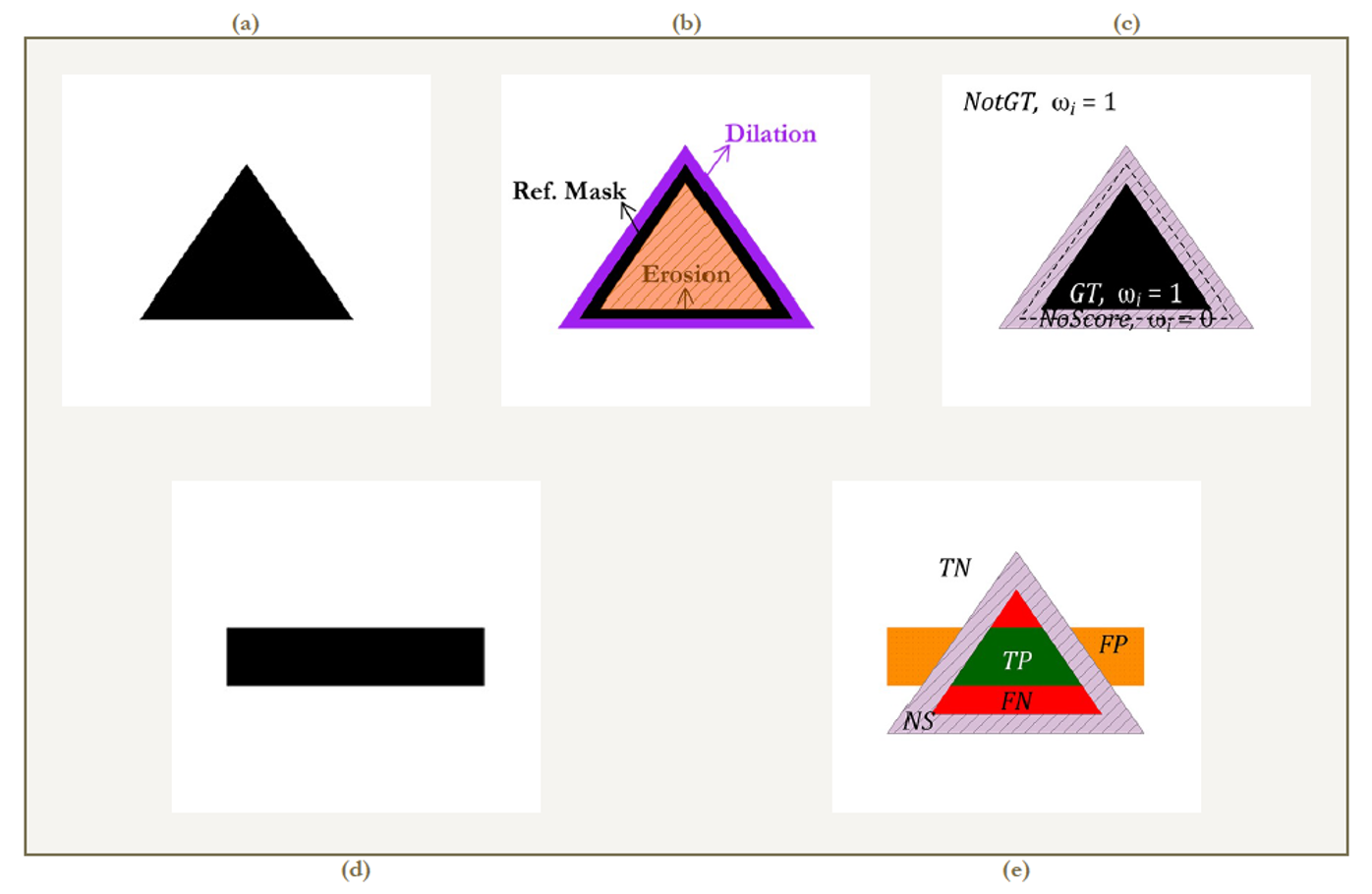

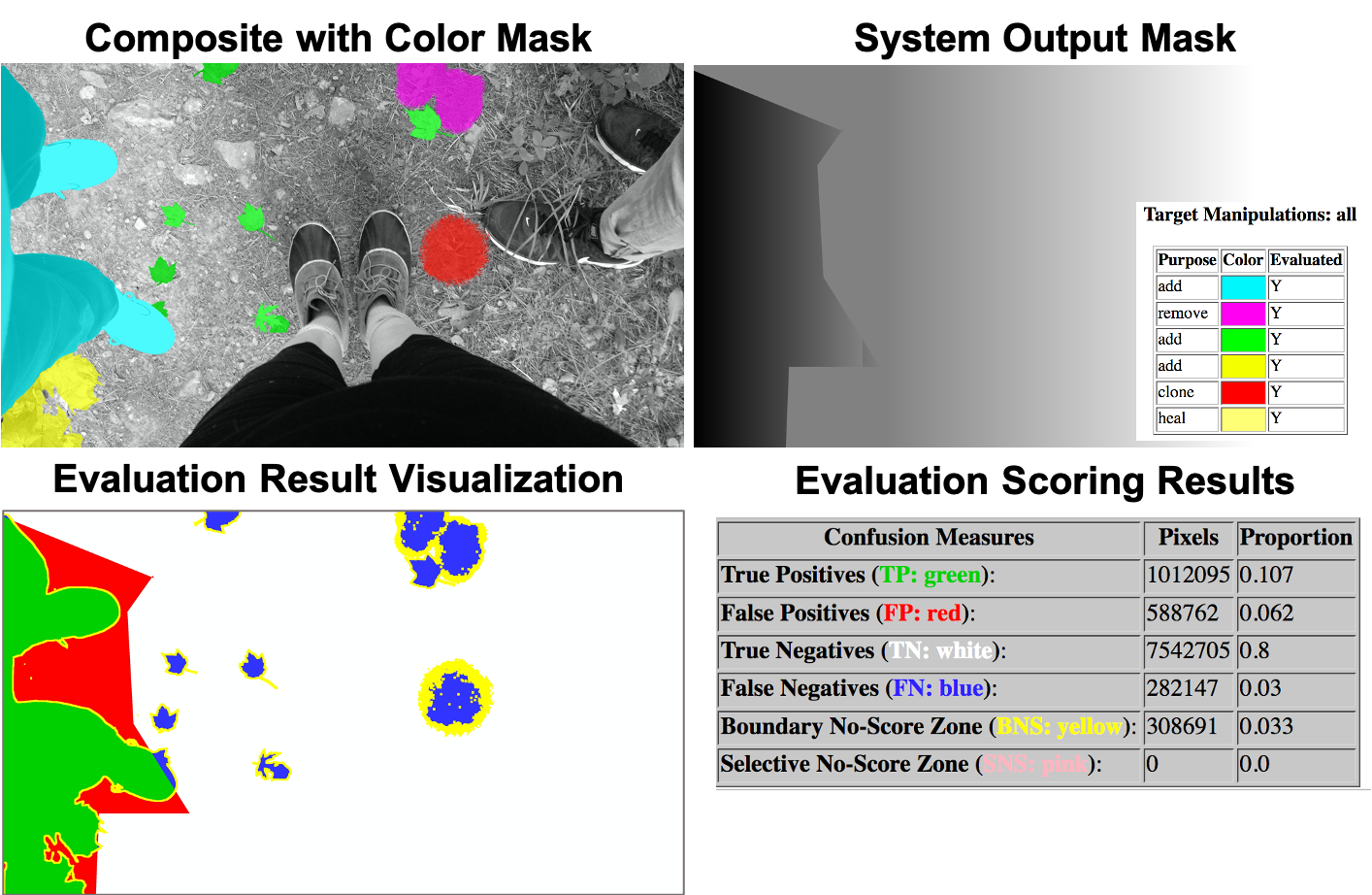

For the image localization performance assessment, the Optimum Matthews Correlation Coefficient (MCC) is the primary metric. The optimum MCC is calculated using an ideal mask-specific threshold found by computing metric scores over all pixel thresholds. Figure (b) below shows a visualization of the different mask regions used for mask image evaluations.

- TP (True Positive): the area (green) where both the reference mask and the system output mask indicate manipulation at the pixel threshold.

- FN (False Negative): the area (red) where the reference mask indicates manipulation but the system did not detect it as manipulated at the threshold.

- FP (False Positive): the area (orange) where the reference mask indicates no manipulation but the system detected it as manipulated at the threshold.

- TN (True Negative): the area (white) where the reference mask indicates no manipulation and the system also detects it as not manipulated at the threshold.

- NS (No-Score): the region (purple) of the reference mask that is not scored, as a result of the dilation and erosion operations.

- If the denominator is zero, then we set MCC_o = 0.

- If MCC_o = 1, there is a perfect correlation between the reference and system output masks.

- If MCC_o = 0, there is no correlation between the reference mask and the system output mask.

- If MCC_o = -1, there is perfect anti-correlation.
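The region counts above feed the standard Matthews Correlation Coefficient, MCC = (TP·TN − FP·FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN)). The following Python sketch shows one way to search for the optimum threshold. It is an illustration rather than the MaskScorer implementation, and it makes two labeled assumptions: a pixel counts as "manipulated" when its greyscale value is at or above the threshold (the official mask polarity is defined in the evaluation plan and may differ), and candidate thresholds are the distinct values present in the system mask.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews Correlation Coefficient; 0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


def confusion_counts(system_mask, reference_mask, threshold, no_score=None):
    """Count TP, TN, FP, FN pixels, skipping no-score pixels."""
    tp = tn = fp = fn = 0
    for r, row in enumerate(system_mask):
        for c, value in enumerate(row):
            if no_score is not None and no_score[r][c]:
                continue  # NS region: excluded from scoring
            predicted = value >= threshold  # assumed polarity, see lead-in
            actual = reference_mask[r][c]
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn


def optimum_mcc(system_mask, reference_mask, no_score=None):
    """Return (best MCC, threshold) over all distinct mask values."""
    thresholds = sorted({value for row in system_mask for value in row})
    best_score, best_threshold = -1.0, None
    for t in thresholds:
        counts = confusion_counts(system_mask, reference_mask, t, no_score)
        score = mcc(*counts)
        if best_threshold is None or score > best_score:
            best_score, best_threshold = score, t
    return best_score, best_threshold


# Toy 2x2 example: the left column is manipulated in the reference.
system = [[200, 10], [180, 20]]
reference = [[True, False], [True, False]]
print(optimum_mcc(system, reference))
```

In the toy example the system mask separates the two columns cleanly, so the search finds a threshold at which the output matches the reference exactly.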

Figure 1. Detection System Performance Metrics: Receiver Operating Characteristic (ROC) and Area Under the Curve (AUC)

Figure 2. Localization System Performance Metrics: Optimum Matthews Correlation Coefficient (MCC)

Figure 3. An Example of Localization System Evaluation Report

Registered participants will get access to datasets created by the DARPA Media Forensics (MediFor) Program [Website Link]. During the registration process, registrants will get the data access credentials.

There will be both development data sets (which include reference material) and evaluation data sets (which consist of only probe images to test systems). Each data set is structured as described in the “MFC Data Set Structure Summary” section below.

Manipulation Detection (MD) Task

Image Manipulation Detection (IMD): OpenMFC20_Image_MD: previous MFC19 Image Data, generated from over 700 image journals, with more than 16K test images.

Video Manipulation Detection (VMD): OpenMFC20_Video_MD: previous MFC19 Video Data, generated from over 100 video journals, with about 1K test videos.

(NEW) Image Splice Manipulation Detection (ISMD): OpenMFC22_SpliceImage_MD: about 500 test images.

Deepfake Detection (DD) Task

Image Deepfake Detection (IDD): OpenMFC20_Image_DD: previous MFC18 GAN Full Image Data, generated from over 200 image journals, with more than 1K test images.

Video Deepfake Detection (VDD): OpenMFC20_Video_DD: previous MFC18 GAN Video Data, over 100 test videos.

Steganography Detection (StegD) Task

(NEW) Steganography Detection (StegD): OpenMFC22_Image_StegD

- DARPA MediFor NC16 kickoff image dataset: 1200 images, size is about 4GB.

- DARPA MediFor NC17 development dataset: 3.5K images from about 400 journals, over 200 videos from over 20 journals, total size is about 441GB.

- DARPA MediFor NC17 Evaluation Part 1 image dataset: 4K images from over 400 journals, size is about 41GB.

- DARPA MediFor NC17 Evaluation Part 1 video dataset: 360 videos from 45 journals, size is about 117GB.

- DARPA MediFor MFC18 development 1 image dataset: 5.6K images from over 177 journals, size is about 80GB.

- DARPA MediFor MFC18 development 2 video dataset: 231 videos from 36 journals, size is about 57GB.

Summary

The NIST OpenMFC datasets are designed and used for the NIST OpenMFC evaluation. The datasets include the following items:

- Original high-provenance image or video

- Manipulated image or video

- (optional) The reference ground-truth information for detection and localization

- Dataset Structure

- README.txt

- /probe - Directory of images/videos to be analyzed for specified task

- /indexes - Directory of index files indicating which images should be analyzed

- /references - Directory of reference ground-truth information. For evaluation datasets, this directory is sequestered for evaluation purposes. For resource datasets, this directory may be released to the public.

MFC Dataset Index File

The index files are pipe-separated CSV-formatted files. The index file for the Manipulation task has the following columns:

- TaskID: Detection task (e.g. "manipulation")

- ProbeFileID: Label of the probe image (e.g. NC2016_9397)

- ProbeFileName: Full filename and relative path of the probe image (e.g. /probe/NC2016_9397.jpg)

- ProbeWidth: Width of the probe image (e.g. 4032)

- ProbeHeight: Height of the probe image (e.g. 3024)

- ProbeFileSize: File size of probe (e.g. 4049990) (manipulation task only)

- HPDeviceID: Camera device ID. If "UNDEF", the data is not provided for training. (e.g. PAR-A077)
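As an illustration of the format, the sketch below reads a pipe-separated index with Python's standard `csv` module. The helper name `read_index` is hypothetical, the presence of a header row is an assumption, and the sample row is assembled from the example values listed above rather than copied from a real index file.

```python
import csv
import io

# Columns that hold integer values according to the column list above.
INT_COLUMNS = ("ProbeWidth", "ProbeHeight", "ProbeFileSize")


def read_index(handle):
    """Parse a pipe-separated index file into a list of dicts.

    Assumes the first line is a header naming the columns.
    """
    rows = []
    for row in csv.DictReader(handle, delimiter="|"):
        for column in INT_COLUMNS:
            if row.get(column):
                row[column] = int(row[column])
        rows.append(row)
    return rows


# Illustrative sample built from the example values in the column list.
SAMPLE = (
    "TaskID|ProbeFileID|ProbeFileName|ProbeWidth|ProbeHeight|"
    "ProbeFileSize|HPDeviceID\n"
    "manipulation|NC2016_9397|/probe/NC2016_9397.jpg|4032|3024|"
    "4049990|PAR-A077\n"
)

rows = read_index(io.StringIO(SAMPLE))
for row in rows:
    print(row["ProbeFileID"], row["ProbeWidth"], row["ProbeHeight"])
```

For a file on disk, the same function can be called with `open(path, newline="")` in place of the `io.StringIO` object.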

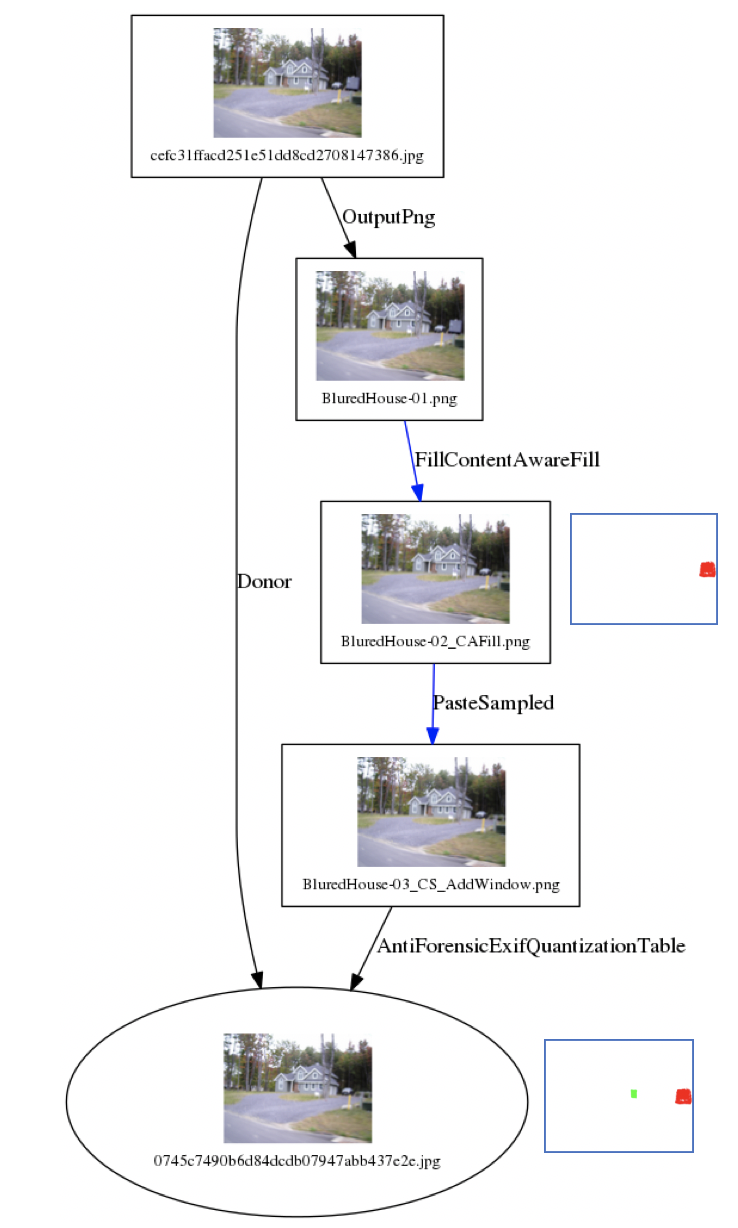

- Image Manipulation Example

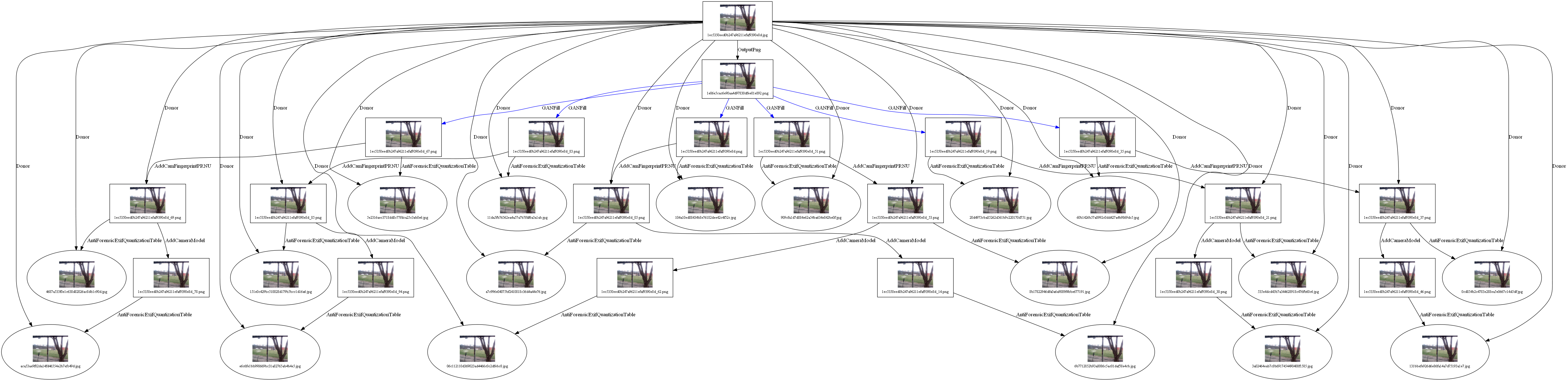

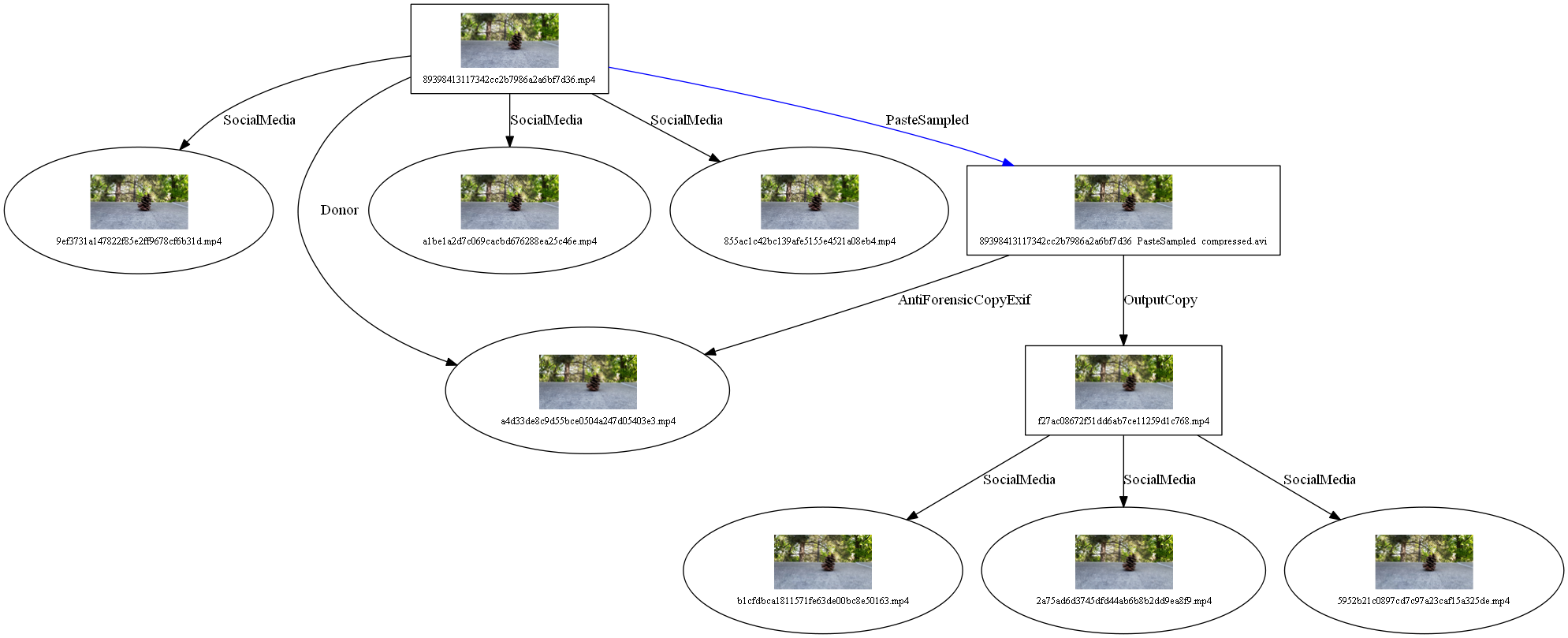

- Video Manipulation Example

- GAN Image Manipulation Example

- GAN Video Manipulation Example

| Date | Event |

|---|---|

| Nov. 14-15, 2023 | OpenMFC 2023 workshop |

| Oct. 25, 2023 | OpenMFC 2023 submission deadline |

| Dec. 6-7, 2022 | OpenMFC 2022 workshop |

| Nov. 15, 2022 | OpenMFC 2022 submission deadline |

| Aug. 1 - Aug. 30, 2022 | OpenMFC 2022 participant pre-challenge phase (QC testing) |

| July 29, 2022 | OpenMFC STEG challenge dataset available |

| July 28, 2022 | OpenMFC 2022 Leaderboard open for the next evaluation cycle |

| Jul. 26, 2022 | (New) OpenMFC dataset resource website |

| Mar. 03, 2022 | OpenMFC2022 Eval Plan available |

| Feb. 15, 2022 | OpenMFC2021 Workshop Talks and Slides available |

| Dec. 7- 10, 2021 | OpenMFC/TRECVID 2021 Virtual Workshop |

| Nov. 1, 2021 | OpenMFC 2021 Virtual Workshop agenda finalization |

| Oct. 30, 2021 | OpenMFC 2020-2021 submission deadline |

| May 15, 2021 | OpenMFC 2020-2021 submission open |

| April 23, 2021 - May 09, 2021 | |

| August 31, 2020 | OpenMFC evaluation GAN image and video dataset available |

| August 21, 2020 | OpenMFC evaluation image and video dataset available |

| August 17, 2020 | OpenMFC development datasets resource available |

IMD-IO (Image Only)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

| 1 | 63 | 2021-06-07 11:26:58 | Mayachitra | test1june6 | 0.993707 | 0.972 | ||

| 2 | 10 | 2020-11-05 21:53:02 | UIIA | naive-efficient | 0.616186 | 0.071351 | ||

| 3 | 67 | 2021-06-08 00:51:16 | UIIA | testIMDL | 0.5 | 0.05 | ||

| 4 | 81 | 2021-06-26 00:31:16 | UIIA | testIMDL | 0.0553688699305928 |

IMD-IM (Image and Metadata)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

ISMD-IO (Image Only)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

ISMD-IM (Image and Metadata)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

VMD-IO (Video Only)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

VMD-IM (Video and Metadata)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

Image Deepfakes Detection (IDD)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

| 1 | 90 | 2021-07-10 09:56:14 | UIIA | test | 0.689716 | 0.207018 | ||

| 2 | 93 | 2021-07-30 18:13:11 | UIIA | test | 0.683956 | 0.187135 | ||

| 3 | 138 | 2024-06-13 15:23:04 | DeepFake-Test | System-Test | 0.578435 | 0.059649 | ||

| 4 | 137 | 2024-06-13 15:22:57 | DeepFake-Test | System-Test | 0.578435 | 0.059649 | ||

| 5 | 136 | 2024-06-13 15:22:50 | DeepFake-Test | System-Test | 0.578435 | 0.059649 | ||

| 6 | 75 | 2021-06-14 04:12:30 | UBMDFL_IGMD | dry-run | 0.554261 | 0.012865 | ||

| 7 | 52 | 2021-06-01 15:41:20 | UBMDFL_IGMD | dry-run | 0.547125 | 0.009357 | ||

| 8 | 82 | 2021-06-23 09:21:26 | UIIA | ptchatt | 0.500033 | 0.051077 | ||

| 9 | 36 | 2021-05-06 07:32:03 | UIIA | test | 0.5 | 0.05 | ||

| 10 | 91 | 2021-07-21 15:53:47 | UIIA | test | 0.478445 | 0.009357 | ||

| 11 | 86 | 2021-07-07 06:59:01 | UIIA | test | 0.41193 | 0.00117 | ||

| 12 | 87 | 2021-07-07 07:04:14 | UIIA | test | 0.403957 | 0.004678 | ||

| 13 | 89 | 2021-07-08 04:20:47 | UIIA | test | 0.398674 | 0.003509 | ||

| 14 | 83 | 2021-06-23 11:08:50 | UIIA | ptchatt | 0.393886 | 0.025039 | ||

| 15 | 85 | 2021-07-05 16:16:58 | UIIA | test | 0.38569 | 0.0 | ||

| 16 | 84 | 2021-06-23 11:22:44 | UIIA | ptchatt | 0.377613 | 0.023884 | ||

| 17 | 78 | 2021-06-17 04:27:03 | UIIA | ptchatt | 0.366392 | 0.004678 | ||

| 18 | 92 | 2021-07-29 02:18:42 | UIIA | test | 0.363539 | 0.00117 | ||

| 19 | 88 | 2021-07-07 17:41:19 | UIIA | test | 0.359211 | 0.026901 |

Video Deepfakes Detection (VDD)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

| 1 | 133 | 2024-06-13 11:44:37 | CERTH-ITI-MEVER | video_df_gan_detection_final | 0.817059 | 0.6 | ||

| 2 | 132 | 2024-06-13 11:23:09 | CERTH-ITI-MEVER | video_df_gan_detection | 0.813382 | 0.6 | ||

| 3 | 131 | 2022-11-15 16:45:18 | UTC 2022 | utcDDv1 | 0.512647 | 0.04 |

Image Steganography Detection (StegD)

| RANK | SUBMISSION ID | SUBMISSION DATE | TEAM NAME | SYSTEM NAME | AUC | CDR@FAR=0.05 | ROC CURVE | AVERAGE OPTIMAL MCC |

|---|---|---|---|---|---|---|---|---|

| 1 | 129 | 2022-09-04 18:20:22 | UIIA | Testing | 0.525687 | 0.0175 | ||

| 2 | 128 | 2022-09-03 16:56:24 | UIIA | Testing | 0.524344 | 0.01 | ||

| 3 | 127 | 2022-09-02 17:01:31 | UIIA | Testing | 0.507594 | 0.0675 | ||

| 4 | 130 | 2022-09-05 04:51:33 | UIIA | Testing | 0.486156 | 0.05 |

- Participation in the OpenMFC20 evaluation is voluntary and open to all who find the task of interest and are willing and able to abide by the rules of the evaluation. To fully participate a registered site must:

- become familiar with, and abide by, all evaluation rules;

- develop/enhance an algorithm that can process the required evaluation datasets;

- submit the necessary files to NIST for scoring; and

- attend the evaluation workshop (if one occurs) and openly discuss the algorithm and related research with other evaluation participants and the evaluation coordinators.

- Participants are free to publish results for their own system but must not publicly compare their results with other participants (ranking, score differences, etc.) without explicit written consent from the other participants.

- While participants may report their own results, participants may not make advertising claims about their standing in the evaluation, regardless of rank, or winning the evaluation, or claim NIST endorsement of their system(s). The following language in the U.S. Code of Federal Regulations (15 C.F.R. § 200.113)14 shall be respected: NIST does not approve, recommend, or endorse any proprietary product or proprietary material. No reference shall be made to NIST, or to reports or results furnished by NIST in any advertising or sales promotion which would indicate or imply that NIST approves, recommends, or endorses any proprietary product or proprietary material, or which has as its purpose an intent to cause directly or indirectly the advertised product to be used or purchased because of NIST test reports or results.

- At the conclusion of the evaluation, NIST may generate a report summarizing the system results for conditions of interest. Participants may publish or otherwise disseminate these charts, unaltered and with appropriate reference to their source.

- During the OpenMFC20 evaluation, a maximum of ten system slots per task can be created. There are no limits on the number of submissions per system slot.

- The challenge participant can train their systems or tune parameters using any data complying with applicable laws and regulations.

- The challenge participant agrees not to probe the test images/videos via manual/human means, such as looking at the media to identify the manipulations, from before the evaluation period until the end of the leaderboard evaluation.

- All machine learning or statistical analysis algorithms must complete training, model selection, and tuning prior to running on the test data. This rule does not preclude online learning/adaptation during test data processing so long as the adaptation information is not reused for subsequent runs of the evaluation collection.

Submitting system output to NIST involves the following steps:

- preparing a system description and self-validating system outputs

- packaging system outputs and system descriptions

- uploading data to a publicly accessible webserver

- transmitting the URL in the form of a task submission using this website

System Descriptions

Documenting each system is vital to interpreting evaluation results. As such, each submitted system, determined by unique submission identifiers, must be accompanied by Submission Identifier(s), System Description, OptOut Criteria, System Hardware Description and Runtime Computation, Training Data and Knowledge Sources, and References.

Packaging Submissions

Using the <SubID> (a team-defined label for the system submission, without spaces or special characters), all system output submissions must be formatted according to the following directory structure:

<SubID>/

<SubID>.txt The system description file, described in Appendix A-a

<SubID>.csv The system output file

/mask The system output mask directory

{MaskFileName1}.png The system output mask file

As an example, if the team is submitting <SubID> baseline_3, their directory would be:

baseline_3/

baseline_3.txt

baseline_3.csv

/mask

Next, build a zip or tar file of your submission and post the file on a web-accessible URL that does not require user/password credentials.
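The packaging step can be sketched in Python with the standard library. The helper name `package_submission` is hypothetical; it checks only the two files named in the directory structure above and treats the mask directory as optional. The file contents written in the demonstration are placeholders, since the system output format itself is defined in the evaluation plan.

```python
import shutil
import tempfile
import zipfile
from pathlib import Path


def package_submission(parent_dir, sub_id):
    """Zip <parent_dir>/<sub_id>/ into <parent_dir>/<sub_id>.zip."""
    sub_dir = Path(parent_dir) / sub_id
    required = [sub_dir / f"{sub_id}.txt", sub_dir / f"{sub_id}.csv"]
    missing = [str(path) for path in required if not path.is_file()]
    if missing:
        raise FileNotFoundError("missing submission files: " + ", ".join(missing))
    # base_dir keeps the <SubID>/ prefix on every path inside the archive.
    archive = shutil.make_archive(
        str(Path(parent_dir) / sub_id), "zip",
        root_dir=str(parent_dir), base_dir=sub_id,
    )
    return Path(archive)


# Demonstration in a temporary directory with placeholder content.
with tempfile.TemporaryDirectory() as tmp:
    sub_dir = Path(tmp) / "baseline_3"
    (sub_dir / "mask").mkdir(parents=True)
    (sub_dir / "baseline_3.txt").write_text("system description placeholder\n")
    (sub_dir / "baseline_3.csv").write_text("")  # placeholder system output
    archive = package_submission(tmp, "baseline_3")
    archive_name = archive.name
    with zipfile.ZipFile(archive) as zf:
        names = zf.namelist()

print(archive_name, names)
```

Passing `"gztar"` instead of `"zip"` to `shutil.make_archive` would produce a tar archive with the same layout.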

Transmitting Submissions

Make your submission using the OpenMFC website. To do so, follow these steps:

- Navigate to your “Dashboard”

- Under “Submission Management”, click the task to submit to.

- Add a new “System” or use an existing system.

- Click on “Upload”

- Fill in the form and click “Submit”

Validator - Single/Double Source Detection Validator

DetectionScorer - Single/Double Source Detection Evaluation Scorer

MaskScorer - Single/Double Source Mask Evaluation (Localization) Scorer

ProvenanceFilteringScorer - Scorer for Provenance Filtering

ProvenanceGraphBuildingScorer - Scorer for Provenance Graph Building

VideoTemporalLocalisationScorer - Scorer for Video Temporal Localization

The MediScorer package git repository is available here. OpenMFC only needs Validator, DetectionScorer, and MaskScorer.

You can download the Journaling Tool package from Github.

- Dartmouth Camera Originals

- 4 million original images with intact metadata collected from 4000+ imaging devices

- Dartmouth Rebroadcast Dataset

- 14,500 rebroadcast images captured from a diverse set of devices: 234 displays, 173 scanners, 282 printers, and 180 recapture cameras.

- Paper Download Link

- UAlbany Clone and Splice Dataset

- 100+ images with region duplication and splicing

- Dataset Download Link

- Celeb-DF

- Dataset for DeepFake Forensics

- v1: 590 original YouTube videos and 5639 corresponding DeepFake videos, Paper Download Link

- v2: 795 DeepFake videos, Website Link

- Dataset Link

- UAlbany Bispectrum Voice Analysis Dataset

- Audio containing human voices was extracted from a collection of YouTube videos.

- Dataset Download Link (DARPA MediFor Team Only)

- UMD Face Swap Dataset

- Dataset of tampered faces created by swapping one face with another using multiple face-swapping apps

- Dataset Download Link

- Purdue Scanner Forensics Dataset

- Dataset contains scanned images and documents from a total of 23 different devices across 20 scanner models; it can be used for PRNU analysis

- Dataset Download Link

- Purdue Dataset used for scientific integrity

- Dataset contains 533 retracted papers and 117 suspected papers from 298 authors

- Dataset Download Link

- UNIFI VISION Dataset

- Dataset of more than 35000 images and videos captured using 35 different portable devices of 11 major brands

- Dataset Download Link

- UNIFI HDR Dataset

- Dataset of more than 5000 SDR and HDR images captured using 23 different mobile devices of 7 major brands

- Dataset Download Link

- UNIFI EVA-7K Dataset

- Dataset of 7000 videos: native, altered (FFmpeg, AVIdemux, ...) and exchanged through social platforms (Tiktok, Weibo, ...)

- Dataset Download Link

- Notre Dame Reddit Provenance Dataset

- Image provenance graphs automatically generated from image content posted to the online Reddit community known as Photoshop battles.

- Reddit dataset contains 184 provenance graphs, which together sum up to 10,421 original and composite images.

- Paper Download Link

- Dataset Download Link

- GAN Collection

- Dataset is a collection of about 356,000 real images and 596,000 GAN-generated images. The GAN-generated images are created by different GAN architectures (such as: CycleGAN, ProGAN, StyleGAN).

- Dataset Download Link

- FaceForensics++

- Dataset contains 1000 original videos and 4000 forged ones (with 4 different manipulations: Face2Face, FaceSwap, Deepfakes, NeuralTexture).

- Dataset Download Link

- UC Berkeley Audio-visual synchronization Dataset

- AudioSet, Website Link

- Download the videos from YouTube, using the times specified by the paper https://research.google.com/audioset/.

- Dataset Size: the raw videos are about 8TB.

- UC Berkeley CNN Detector via ProGAN Dataset

- ProGAN images were synthesized newly for each training epoch

- Download Link in Kitware shared drive

- UA_DFV (UAlbany FaceWarp/FWA_Dataset)

- The dataset contains 49 real and 49 fake videos.

- Download Link

- Celeb-DF

- A New Dataset for DeepFake Forensics.

- v1: 590 original YouTube videos and 5639 corresponding DeepFake videos, Paper https://arxiv.org/abs/1909.12962

- v2: 795 DeepFake videos

- Download Link

- Github Link

- Video Frame Duplication Dataset

- Subset of MFC18 Video Dev3

- The training part is similar to the frame drop model to distinguish selective frames and duplicated frames. We can use the frame drop model here directly.

- DARPA MediFor Team Only

- Holistic image MFC18 dataset

- Subset of MFC18 Image Dev1

- DARPA MediFor Team Only

- Columbia Image Splicing Detection Evaluation Dataset

- 1845 image blocks extracted from images in CalPhotos collection, with a fixed size of 128 pixels x 128 pixels.

- Website Link

- Dresden Image Database

- REWIND Datasets

- RAISE Dataset - A Raw Image Dataset for Digital Image Forensics

- CASIA Tampered Image Detection Evaluation Datasets

- FAU-Erlangen Image Manipulation Datasets

- UCID Uncompressed Color Image Datasets

- SUN Database

- INRIA Copy Days

- Realistic Tampering Dataset

- This dataset contains 220 realistic forgeries created by hand in modern photo-editing software (GIMP and Affinity Photo) and covers various challenging tampering scenarios involving both object insertion and removal.

- Website Link

- BOSSbase 1.01

- 10,000 never-compressed 512x512 grayscale images

- Paper Link

- Dataset Download Link

- GRIP copy-move database

- Dataset contains 80 pristine images and 80 forged images with rigid copy-move, possibility to generate other copy-moves with rescaling, rotation, compression and noise.

- Paper Link

- Dataset Download Link

- DEFACTO database

- Dataset contains over 200,000 forged images with different kinds of manipulations.

- Paper Link

- Dataset Download Link

- IMD2020

- Dataset contains both synthetic manipulations and real ones (around 37,000).

- Paper Link

- Dataset Download Link

- DeeperForensics-1.0

- Dataset contains 48,475 source videos and 11,000 fake videos with face manipulations.

- Paper Link

- Dataset Download Link

- DeepFake Detection Dataset (DFD) - Google

- Dataset contains 363 pristine videos and 3068 fake videos with face manipulations.

- Information Link

- Dataset Download Link

- DeepFake Detection Challenge Dataset (DFDC) – Facebook

- Dataset contains 19,154 pristine videos and 100,000 fake videos with face manipulations.

- Paper Link

- Dataset Download Link

- Haiying Guan, Andrew Zhang, and Jim Horan, Guardians of Forensic Evidence: Evaluating Analytic Systems Against AI-Generated Deepfakes, in Accelerating Forensic Innovation for Impact (AFI2) Competition Section, Forensics@NIST symposium, Nov. 20, 2024. [Paper Download Link]

- Haiying Guan, NIST Open Media Forensics Challenge, Briefing for NITRD Information Integrity R&D Interagency Working Group (IIRD IWG), Sept.22, 2023. [Paper Download Link]

- Haiying Guan, Yooyoung Lee, and Lukas Diduch, Open Media Forensics Challenge 2022 Evaluation Plan. [Paper Download Link]

- Haiying Guan, Yooyoung Lee, Lukas Diduch, Ilia Ghorbanian, and Jim Horan, Open Media Forensics Challenge (OpenMFC) 2021 Workshop Presentation Slides. [Paper Download Link]

- Haiying Guan, Yooyoung Lee, Lukas Diduch, Jesse Zhang, Ilia Ghorbanian, Timothee Kheyrkhah, Peter Fontana, Jonathan Fiscus and James J Filliben, Open Media Forensics Challenge (OpenMFC) 2020-2021: Past, Present, and Future, NIST IR 8396. [Paper Download Link]

- Haiying Guan, Andrew Delgado, Yooyoung Lee, Amy N. Yates, Daniel Zhou, Timothee Kheyrkhah, and Jonathan Fiscus, User Guide for NIST Media Forensic Challenge (MFC) Datasets, NIST Interagency/Internal Report (NISTIR) Number 8377, July 2021. [Paper Download Link]


- A. N. Yates, H. Guan, Y. Lee, A. Delgado, T. Kheyrkhah and J. Fiscus, "Open Media Forensics Challenge 2020 Evaluation Plan," September 2020. [Paper Download Link]

- J. Fiscus, H. Guan, Y. Lee, A. N. Yates, A. Delgado, D. Zhou, T. Kheyrkhah, "Open Media Forensics Challenge 2020 - DARPA MediFor Demo Day Presentation," August 2020. [Paper Download Link]

- J. Fiscus, H. Guan, Y. Lee, A. N. Yates, A. Delgado, D. Zhou, T. Kheyrkhah, and Xiongnan Jin, "NIST Media Forensic Challenge (MFC) Evaluation 2020 - 4th Year DARPA MediFor PI meeting," July 2020. [Paper Download Link]

- J. Fiscus, H. Guan, "Media Forensics Challenge Evaluation Overview Talk on ARO Sponsored Workshop on Assured Autonomy," June 2020. [Paper Download Link]

- H. Guan, M. Kozak, E. Robertson, Y. Lee, A. N. Yates, A. Delgado, D. Zhou, T. Kheyrkhah, J. Smith and J. Fiscus, "MFC Datasets: Large-Scale Benchmark Datasets for Media Forensic Challenge Evaluation," 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), pp. 63-72, 2019. [Paper Download Link]

- E. Robertson, H. Guan, M. Kozak, Y. Lee, A. N. Yates, A. P. Delgado, D. F. Zhou, T. N. Kheyrkhah, J. Smith and J. G. Fiscus, "Manipulation Data Collection and Annotation Tool for Media Forensics," IEEE Computer Vision and Pattern Recognition conference 2019, Workshop on Applications of Computer Vision and Pattern Recognition to Media Forensics, 2019. [Paper Download Link]